About Spencer Stuart

Spencer Stuart is a global leader in executive search and board and leadership consulting, advising organizations around the world for more than 60 years. It has built a reputation for delivering real impact for its clients, from the world's largest companies to startups and non-profits. Its work includes producing industry and functional analysis reports in support of search engagements, internal meetings, and new business initiatives, covering competitive information, trends across sub-sectors, and target company list development.

Executive Summary

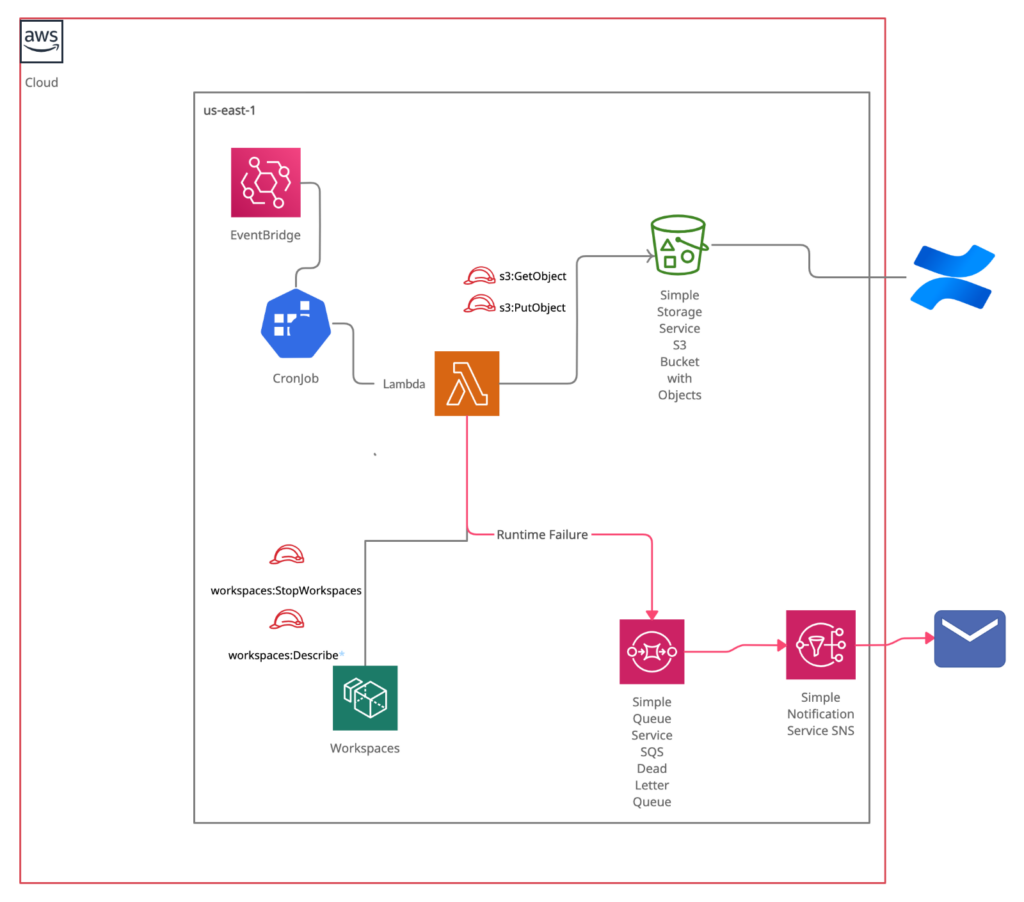

Spencer Stuart's main objective was a serverless automation that detects and frees up workspaces that are no longer in use. The requirements called for periodic, continuous checks on the workspaces, in an architecture that is fault tolerant and secure. From the workspace data, we had to generate reports that give a broader picture of the resources in use, and the solution also had to track and maintain historical records.

We proposed AWS Lambda functions to do most of the heavy lifting, supported by other AWS services such as Amazon S3, Amazon EventBridge, Amazon SQS, and Amazon SNS. Together, these services let us build a fully serverless architecture and take advantage of its benefits. An EventBridge rule with a cron expression triggers a Lambda function that tracks which workspaces are currently active and which have been inactive for 14 days or more. After collecting this data, the function decides which workspaces to terminate. Throughout this process, the function reads and writes its lookup data in S3. To protect the system against failed Lambda executions, we used SQS as a dead-letter queue (DLQ), which makes it easy to notify the team of Lambda failures and resolve them with minimal downtime.
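
The termination decision described above ends in a call to the WorkSpaces TerminateWorkspaces API, which accepts at most 25 workspace IDs per request. The sketch below is an illustration under assumptions, not the production code: it assumes the Lambda function holds a `boto3.client("workspaces")` (or any object with the same `terminate_workspaces` method), and the helper names `batched` and `terminate_in_batches` are hypothetical.

```python
from typing import Dict, Iterator, List

# TerminateWorkspaces accepts at most 25 workspace IDs per request.
MAX_BATCH = 25


def batched(ids: List[str], size: int = MAX_BATCH) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `size` IDs (hypothetical helper)."""
    for start in range(0, len(ids), size):
        yield ids[start:start + size]


def terminate_in_batches(ws_client, workspace_ids: List[str]) -> List[Dict]:
    """Terminate workspaces batch by batch and collect per-workspace failures.

    `ws_client` is assumed to be boto3.client("workspaces") or a stub exposing
    the same terminate_workspaces method (hypothetical helper).
    """
    failed: List[Dict] = []
    for batch in batched(workspace_ids):
        response = ws_client.terminate_workspaces(
            TerminateWorkspaceRequests=[{"WorkspaceId": wid} for wid in batch]
        )
        # Per-workspace failures come back in FailedRequests instead of raising.
        failed.extend(response.get("FailedRequests", []))
    return failed
```

Returning the `FailedRequests` entries rather than raising lets the caller list partial failures in the report instead of aborting the whole run.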

Challenge

Because of significant data-privacy concerns, Spencer Stuart allowed access to most of its applications only through Amazon WorkSpaces. This, however, raised a growing concern about inactive workspaces: not only did they add to costs, but users also retained access to their workspaces even after leaving the organization. The company therefore adopted a policy that all workspaces inactive for more than 14 days must be deleted. A detailed report of the deleted workspaces also had to be published on Confluence so that managers could be notified of any false positives.

Benefits

- Reduces the risk of outsiders, including former employees, accessing confidential data and applications that are only accessible through WorkSpaces.

- Automates the infrastructure and removes the need for manual monitoring.

- Cuts the cost of inactive but still-running workspaces.

- Provides detailed analytics of workspace activity.

Partner Solution

Spencer Stuart's main requirement was an automated mechanism that manages workspaces and deletes them based on inactivity. Resource deletion had to run on a regular schedule without failures, and reporting had to be continuous.

Based on these requirements, we used AWS Lambda to query the workspaces; an S3 bucket for reporting, file hosting, and data persistence; and EventBridge to run a cron schedule that invokes the Lambda function at regular intervals. We added an SQS dead-letter queue to handle failures robustly and retrigger the Lambda function when needed, along with an SNS subscription to send notifications.
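
For the data-persistence role of S3, a minimal sketch assuming run history is kept as a single JSON array in one object. The object key `history/workspace-runs.json` and the helpers `append_run` and `record_run` are hypothetical; `s3` is assumed to be a `boto3.client("s3")`, whose `get_object` raises `NoSuchKey` when the object does not exist yet.

```python
import json
from datetime import datetime, timezone
from typing import Dict, List, Optional

# Hypothetical object key holding every past run as one JSON array.
HISTORY_KEY = "history/workspace-runs.json"


def append_run(existing: Optional[bytes], run: Dict) -> bytes:
    """Return the history document with `run` appended (pure, no AWS calls)."""
    history: List[Dict] = json.loads(existing) if existing else []
    history.append(run)
    return json.dumps(history, indent=2).encode("utf-8")


def record_run(s3, bucket: str, deleted: List[str], at_risk: List[str]) -> None:
    """Read the history object, append this run, and write it back.

    `s3` is assumed to be boto3.client("s3") (hypothetical helper).
    """
    existing: Optional[bytes] = None  # stays None on the very first run
    try:
        existing = s3.get_object(Bucket=bucket, Key=HISTORY_KEY)["Body"].read()
    except s3.exceptions.NoSuchKey:
        pass
    run = {
        "run_at": datetime.now(timezone.utc).isoformat(),
        "deleted": deleted,
        "at_risk": at_risk,
    }
    s3.put_object(
        Bucket=bucket,
        Key=HISTORY_KEY,
        Body=append_run(existing, run),
        ContentType="application/json",
    )
```

This read-modify-write approach assumes only one invocation writes at a time; with concurrent writers, one object per run (for example, keyed by run date) would avoid lost updates.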

EventBridge for Cron Jobs

EventBridge rules can run on a predefined schedule, which lets EventBridge act as a cron job scheduler; in our case, the rule runs weekly. EventBridge is efficient and economical for projects of any scale, schedules can be set down to the minute, and typical latency is about 0.5 seconds.
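
As an illustration, a weekly schedule uses EventBridge's six-field cron syntax (minutes, hours, day-of-month, month, day-of-week, year, evaluated in UTC), in which one of the two day fields must be `?`. The sketch below shows roughly what a SAM template would declare, expressed as API calls; the Monday 06:00 UTC slot, the rule and target names, and the helper functions are hypothetical, and `events` and `lambda_client` are assumed to be Boto3 clients for EventBridge and Lambda.

```python
def weekly_cron(day: str = "MON", hour: int = 6, minute: int = 0) -> str:
    """Build an EventBridge cron expression that fires once a week (UTC).

    Fields: minutes hours day-of-month month day-of-week year.
    Day-of-month must be '?' because day-of-week is set.
    """
    if not (0 <= hour <= 23 and 0 <= minute <= 59):
        raise ValueError("hour or minute out of range")
    return f"cron({minute} {hour} ? * {day} *)"


def schedule_cleanup(events, lambda_client, rule_name: str, function_arn: str) -> str:
    """Create the weekly rule, target the function, and allow the invoke.

    `events` and `lambda_client` are assumed to be boto3 clients for
    EventBridge ("events") and Lambda ("lambda"); names are hypothetical.
    """
    rule = events.put_rule(
        Name=rule_name,
        ScheduleExpression=weekly_cron(),
        State="ENABLED",
        Description="Weekly WorkSpaces inactivity check",
    )
    events.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "workspaces-cleanup", "Arn": function_arn}],
    )
    # Resource-based permission so EventBridge may invoke the function.
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule["RuleArn"],
    )
    return rule["RuleArn"]
```

`add_permission` is what allows EventBridge to invoke the function; calling it again with the same `StatementId` raises a conflict error, so this is one-time setup rather than something to run on every deployment.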

EventBridge has the following advantages over traditional methods of scheduling cron jobs:

- Jobs run on AWS Lambda rather than on your own servers, so server performance is not affected.

- No polling is required to check for scheduled jobs.

- Scheduling jobs is straightforward: AWS provides APIs to create and manage schedules directly.

- Long-running jobs do not block other scheduled jobs, because each invocation runs independently.

Reporting and Termination of Workspaces

We used the Python 3.7 runtime and the AWS SDK for Python (Boto3) to describe the workspaces and their activity, retrieving the user, email address, and last activity of each workspace.
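
A sketch of this query step, assuming `ws` is a `boto3.client("workspaces")`. It pages through `describe_workspaces` and `describe_workspaces_connection_status` by following `NextToken`, then joins the two on `WorkspaceId`, using `LastKnownUserConnectionTimestamp` as the last-activity value. Neither response includes an email address, so how the email was resolved is not shown; `paginate` and `collect_activity` are hypothetical names.

```python
from typing import Any, Callable, Dict, Iterator, List, Optional


def paginate(call: Callable[..., Dict], result_key: str, **kwargs: Any) -> Iterator[Dict]:
    """Call a WorkSpaces describe API repeatedly, following NextToken."""
    token: Optional[str] = None
    while True:
        params = dict(kwargs)
        if token:
            params["NextToken"] = token  # omitted on the first call
        page = call(**params)
        yield from page.get(result_key, [])
        token = page.get("NextToken")
        if not token:
            return


def collect_activity(ws) -> List[Dict]:
    """Join each workspace with its last known user connection time.

    `ws` is assumed to be boto3.client("workspaces"). The timestamp may be
    absent (e.g. nobody has connected yet), so LastActivity can be None.
    """
    last_seen = {
        status["WorkspaceId"]: status.get("LastKnownUserConnectionTimestamp")
        for status in paginate(ws.describe_workspaces_connection_status, "WorkspacesConnectionStatus")
    }
    return [
        {
            "WorkspaceId": w["WorkspaceId"],
            "UserName": w.get("UserName"),
            "LastActivity": last_seen.get(w["WorkspaceId"]),
        }
        for w in paginate(ws.describe_workspaces, "Workspaces")
    ]
```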

After querying all workspaces, we filtered those with more than 14 days of inactivity. We also identified the workspaces at risk of being deleted in the next execution and notified the associated email addresses.
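
The filtering rule can be sketched as follows. It assumes records shaped like `{"WorkspaceId": ..., "LastActivity": datetime or None}` and the weekly schedule; under that assumption, a workspace idle for more than 7 days will cross the 14-day limit before the next run, so it counts as at risk. Skipping never-connected workspaces (`LastActivity` of `None`) is also an assumption, since the case study does not say how they were handled.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Optional, Tuple

INACTIVITY_LIMIT = timedelta(days=14)  # deletion policy from the Challenge section
RUN_INTERVAL = timedelta(days=7)       # assumes the weekly EventBridge schedule


def classify(workspaces: List[Dict], now: datetime) -> Tuple[List[str], List[str]]:
    """Split workspace IDs into (to_delete, at_risk_next_run)."""
    to_delete: List[str] = []
    at_risk: List[str] = []
    for ws in workspaces:
        last: Optional[datetime] = ws.get("LastActivity")
        if last is None:
            # Assumption: never-connected workspaces are left for manual review.
            continue
        idle = now - last
        if idle > INACTIVITY_LIMIT:
            to_delete.append(ws["WorkspaceId"])
        elif idle + RUN_INTERVAL > INACTIVITY_LIMIT:
            # Will exceed the limit by the next weekly run unless the user reconnects.
            at_risk.append(ws["WorkspaceId"])
    return to_delete, at_risk
```

Boto3 returns timezone-aware timestamps, so `now` should then be aware as well, for example `datetime.now(timezone.utc)`.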

A detailed CSV report, listing the deleted workspaces and those that may be deleted in the next execution, was also written to an S3 bucket connected directly to Confluence.
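
A minimal sketch of building that CSV with the standard `csv` module and uploading it with `put_object`. The column set, the status labels, and the helper names are hypothetical, `s3` is assumed to be a `boto3.client("s3")`, and the Confluence connection itself is not covered.

```python
import csv
import io
from typing import Dict, List

# Hypothetical report columns; extra keys in the input rows are ignored.
FIELDS = ["WorkspaceId", "UserName", "LastActivity", "Status"]


def build_report(deleted: List[Dict], at_risk: List[Dict]) -> str:
    """Render deleted and at-risk workspaces as one CSV document."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    for row in deleted:
        writer.writerow({**row, "Status": "DELETED"})
    for row in at_risk:
        writer.writerow({**row, "Status": "AT_RISK_NEXT_RUN"})
    return buf.getvalue()


def upload_report(s3, bucket: str, key: str, report: str) -> None:
    """Store the report in S3; `s3` is assumed to be boto3.client("s3")."""
    s3.put_object(Bucket=bucket, Key=key, Body=report.encode("utf-8"), ContentType="text/csv")
```

Putting both lists in one file with a status column keeps a single document for managers to review for false positives.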

Handling pipeline failures

One challenge in the pipeline was handling Lambda execution failures, which can occur at runtime for many reasons. We addressed this with retries and an SQS dead-letter queue (DLQ). When the Lambda function fails, it is retried, for up to three attempts in total, to get past transient dependency issues. If it still fails, a message with the failure details, such as the error message and timestamp, is sent to the DLQ, which is configured as the function's on-failure destination. Another service then polls the DLQ and publishes notifications through SNS to the subscribed recipients.
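
The failure path can be sketched in two pieces: configuring the on-failure destination, and a poller that forwards DLQ messages to SNS. This assumes the queue is used as an asynchronous-invocation on-failure destination, whose records carry `timestamp`, `requestContext`, and `responsePayload` fields; the helper names, subject line, and polling parameters are hypothetical, and `lambda_client`, `sqs`, and `sns` are assumed to be Boto3 clients.

```python
import json
from typing import Dict


def configure_failure_handling(lambda_client, function_name: str, queue_arn: str) -> None:
    """Retry failed async invocations twice, then send the record to SQS."""
    lambda_client.put_function_event_invoke_config(
        FunctionName=function_name,
        MaximumRetryAttempts=2,  # Lambda allows 0-2 retries for async invocations
        DestinationConfig={"OnFailure": {"Destination": queue_arn}},
    )


def summarize_failure(body: str) -> str:
    """Pull the useful fields out of an on-failure destination record."""
    record: Dict = json.loads(body)
    ctx = record.get("requestContext", {})
    error = record.get("responsePayload") or {}
    return (
        f"Lambda failure at {record.get('timestamp')}: "
        f"{ctx.get('condition')} after {ctx.get('approximateInvokeCount')} attempts; "
        f"{error.get('errorType')}: {error.get('errorMessage')}"
    )


def drain_dlq(sqs, sns, queue_url: str, topic_arn: str) -> int:
    """Forward every queued failure to SNS, deleting each message after publishing."""
    forwarded = 0
    while True:
        resp = sqs.receive_message(QueueUrl=queue_url, MaxNumberOfMessages=10, WaitTimeSeconds=5)
        messages = resp.get("Messages", [])
        if not messages:
            return forwarded  # queue is (momentarily) empty
        for msg in messages:
            sns.publish(
                TopicArn=topic_arn,
                Subject="WorkSpaces cleanup failure",
                Message=summarize_failure(msg["Body"]),
            )
            # Delete only after publish succeeds, so a failed publish is retried later.
            sqs.delete_message(QueueUrl=queue_url, ReceiptHandle=msg["ReceiptHandle"])
            forwarded += 1
```

Because a message is deleted only after `publish` returns, a notification failure leaves it in the queue; it becomes visible again after the visibility timeout and is picked up on the next poll.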

Deployment and Maintenance

The Python Lambda functions were deployed using AWS CodeCommit, AWS CodeBuild, AWS CloudFormation, and AWS SAM templates, with AWS CodePipeline orchestrating the process.

The infrastructure was provisioned mainly through CloudFormation, with AWS Serverless Application Model (SAM) templates defining the serverless code. CodeBuild built the source code using the SAM template and output a new template file to deploy as a CloudFormation stack. Whenever code was merged into the ‘main’ branch, CodePipeline automatically deployed it to two environments: Dev and Prod.